Book Summary of Superforecasting

Jason Zweig said Superforecasting by Philip Tetlock and Dan Gardner is “the most important book on decision making since Kahneman’s Thinking, Fast and Slow”.

Tetlock co-created the Good Judgment Project (GJP), which participated in a forecasting tournament held by IARPA, a U.S. government organization that supports research with the potential to revolutionize intelligence analysis. The GJP won the tournament, and its forecasters were 30% more accurate than intelligence officers with access to classified info.1 Superforecasting is about how those top forecasters think about the world. Here are my key takeaways from the book:

- What makes a good forecast: Forecasts need concrete timelines and probabilities, not open-ended guesses like “this could happen.” Good forecasts have high calibration (forecasted probabilities match how often events actually occur) and high resolution (forecasts are decisive, leaning toward 0% or 100% when the evidence warrants it rather than hedging near 50%). Good forecasters first take the outside view and then the inside view. The outside view starts from the base rate for an event. For example, a president’s approval rating typically rises after they leave office, so a rise for any one president is not remarkable.

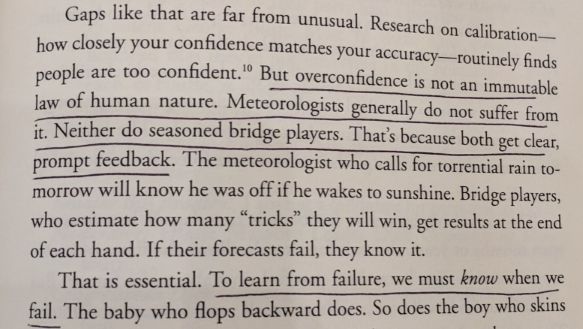
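
To make calibration and resolution concrete, here is a small scoring sketch. The book scores forecasters with Brier scores. The version below is the common mean-squared-error form for yes/no questions (0 is perfect, and always saying 50% earns 0.25), which is an assumption about scaling on my part. The function names and sample forecasts are made up for illustration.

```python
from collections import defaultdict


def brier_score(forecasts, outcomes):
    """Mean squared gap between forecast probabilities and 0/1 outcomes (lower is better)."""
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


def calibration_table(forecasts, outcomes):
    """Map each stated probability (rounded to 0.1) to how often the event actually happened."""
    buckets = defaultdict(list)
    for p, o in zip(forecasts, outcomes):
        buckets[round(p, 1)].append(o)
    return {p: sum(hits) / len(hits) for p, hits in sorted(buckets.items())}


# Hypothetical data: 20 yes/no questions, 10 of which resolved "yes" (1).
outcomes = [1] * 9 + [0] + [0] * 9 + [1]

# A hedger says 50% on everything: calibrated (half the events happened) but zero resolution.
hedger = [0.5] * 20

# A decisive forecaster says 90% or 10%, and is right about as often as those numbers imply.
decisive = [0.9] * 10 + [0.1] * 10

print(calibration_table(hedger, outcomes))    # stated 0.5 -> happened 0.5 of the time
print(calibration_table(decisive, outcomes))  # stated 0.1 -> 0.1, stated 0.9 -> 0.9
print(brier_score(hedger, outcomes))          # 0.25
print(round(brier_score(decisive, outcomes), 3))  # 0.09
```

Both forecasters are well calibrated here, but the decisive one gets a much better score because their forecasts also have resolution.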

- Forecasting ability depends on the domain: Good forecasters know when they’re right or wrong. It’s impossible to know this without clear feedback. Meteorologists and bridge players get clear feedback on when they’re wrong, but it’s tougher to judge a forecast in more subjective fields.

- On the wisdom of crowds: Aggregating the judgement of many people frequently beats the accuracy of the average group member. This doesn’t mean crowds are always smarter – aggregating the judgements of people who know nothing produces nothing. A group of people from different backgrounds increases the probability that some members have info other members don’t. This pools together knowledge and improves the crowd’s accuracy.

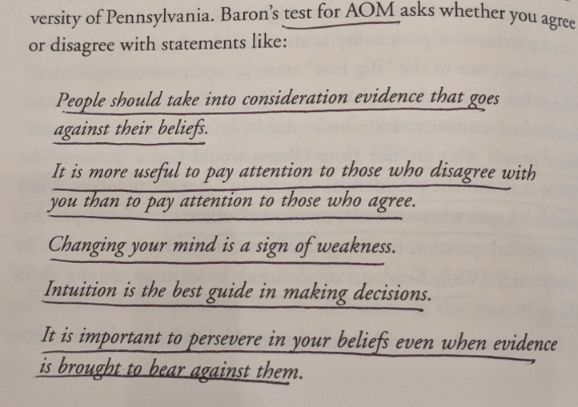
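
A quick way to see the crowd effect is to score an averaged forecast against the average member. This is only a sketch: the five probabilities are invented, the event is assumed to have happened, and a plain unweighted mean stands in for whatever aggregation method a real tournament team would use.

```python
from statistics import mean


def squared_error(probability, outcome):
    """Brier-style penalty for one forecast on one yes/no question."""
    return (probability - outcome) ** 2


# Hypothetical forecasts from five people with different information, for an event that happened.
member_forecasts = [0.55, 0.70, 0.90, 0.40, 0.80]
outcome = 1

# The crowd forecast is the simple average of the individual probabilities.
crowd_forecast = mean(member_forecasts)

crowd_error = squared_error(crowd_forecast, outcome)
average_member_error = mean(squared_error(p, outcome) for p in member_forecasts)

print(f"crowd forecast:       {crowd_forecast:.2f}")
print(f"crowd error:          {crowd_error:.4f}")
print(f"average member error: {average_member_error:.4f}")
```

The averaged forecast can never score worse than the average member under a squared-error penalty, but it can still lose to the best individual, and it is only as good as the information the members bring.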

- Open-mindedness is an important skill: There was a correlation between a forecasting team’s open-mindedness and its accuracy. Confirmation bias undermines our ability to have an open mind. We’d rather read articles that support our opinions than read evidence that makes us feel wrong. If someone can’t answer “what would convince me I’m wrong?”, they’re too attached to their view.

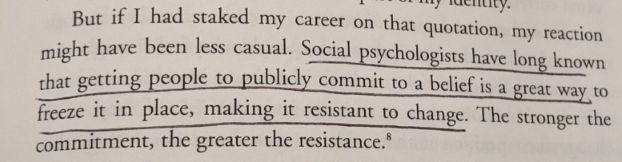

- Experts don’t think differently than everyday people: Experts don’t like to admit they’re wrong. Popular experts are even more likely to stick to a forecast (despite contrary evidence) since publicly committing to a belief tends to freeze it in place.

- How to separate luck from skill: We don’t think that lottery winners are skilled ticket pickers, but we do think that some investors are skilled stock pickers. Regression to the mean can test the role of luck. Slow regression is more often seen in activities dominated by skill, while faster regression is more associated with luck.2 Stock pickers exhibit substantial regression to the mean – top-performing managers over the past few years consistently revert to the mean and underperform over the next few years.3

- Why we love stories: Humans want answers and hate uncertainty. The truth about investing is that nobody knows what will happen in the short term. Portfolios should be constructed around facts we know to be true – not opinions or feelings. The most basic facts of investing are that diversification works, costs should be low, and a simple portfolio is easier to maintain than a complex one.

- The profile of a superforecaster: Their baseline outlook is that nothing is certain and life is complex. They’re actively open-minded and think hypotheses should be tested, not protected. They don’t have an agenda and change their mind when the facts change. They have a growth mindset and can persevere when the work gets hard.
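
The regression-to-the-mean test from the luck-versus-skill takeaway can be illustrated with a toy simulation. Everything here is an assumption for illustration: each performer gets a fixed skill level, each period adds fresh random luck, and `skill_weight` (a made-up parameter) controls how much of a result comes from skill. The function measures how much of the top decile's first-period edge survives into the second period.

```python
import random
from statistics import mean


def persistence(skill_weight, n=5000, seed=0):
    """Share of the top decile's period-1 outperformance that carries over to period 2."""
    rng = random.Random(seed)
    skill = [rng.gauss(0, 1) for _ in range(n)]  # fixed across both periods

    def one_period():
        # Result = weighted mix of lasting skill and luck that is redrawn every period.
        return [skill_weight * s + (1 - skill_weight) * rng.gauss(0, 1) for s in skill]

    first, second = one_period(), one_period()

    # Pick the top 10% of performers based on the first period only.
    top = sorted(range(n), key=lambda i: first[i], reverse=True)[: n // 10]
    return mean(second[i] for i in top) / mean(first[i] for i in top)


mostly_skill = persistence(skill_weight=0.9)
mostly_luck = persistence(skill_weight=0.1)

print(f"mostly skill: {mostly_skill:.2f} of the edge persists")
print(f"mostly luck:  {mostly_luck:.2f} of the edge persists")
```

When results are mostly skill, the first period's winners keep nearly all of their edge. When results are mostly luck, the edge almost entirely disappears, which is the fast regression the takeaway describes.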

Overall, Superforecasting was a worthwhile read. There’s also a great video series with Tetlock and Kahneman discussing the book.